Introduction to Temporal Response Functions (TRFs)

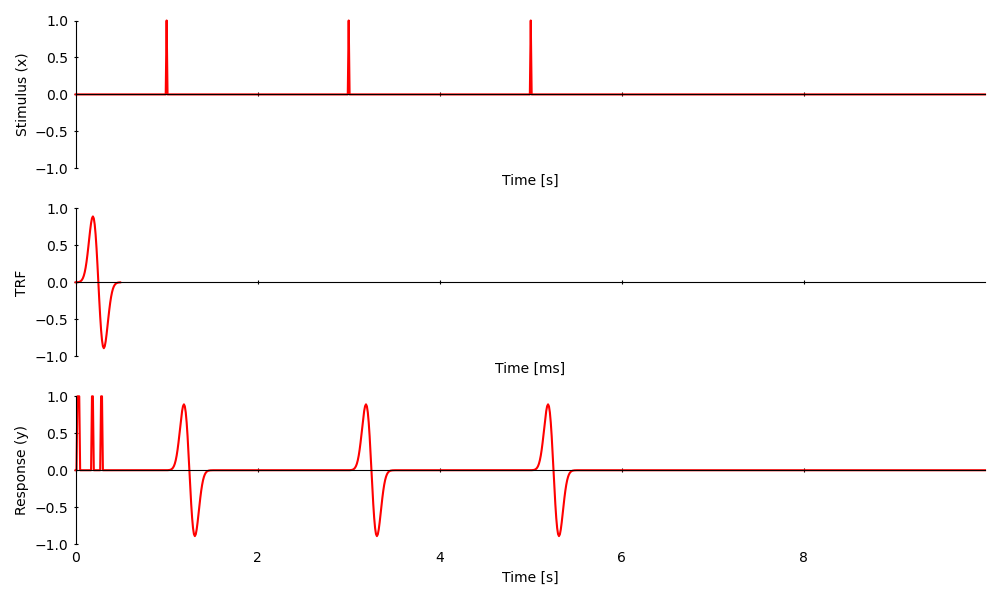

A temporal response function (TRF) is a linear model of the stimulus-response dependency. The response is predicted by a linear convolution of the stimulus with the TRF. This means that for every non-zero element in the stimulus, there will be a response in the shape of the TRF:

from eelbrain import *

import numpy as np

# Construct a 10 s long stimulus

time = UTS(0, 0.01, 1000)

x = NDVar(np.zeros(len(time)), time)

# add a few impulses

x[1] = 1

x[3] = 1

x[5] = 1

# Construct a TRF of length 500 ms

trf_time = UTS(0, 0.01, 50)

trf = gaussian(0.200, 0.050, trf_time) - gaussian(0.300, 0.050, trf_time)

# The response is the convolution of the stimulus with the TRF

y = convolve(trf, x)

plot_args = dict(columns=1, axh=2, w=10, frame='t', legend=False, colors='r')

plot.UTS([x, trf, y], ylabel=['Stimulus (x)', 'TRF', 'Response (y)'], **plot_args)

<UTS: None>

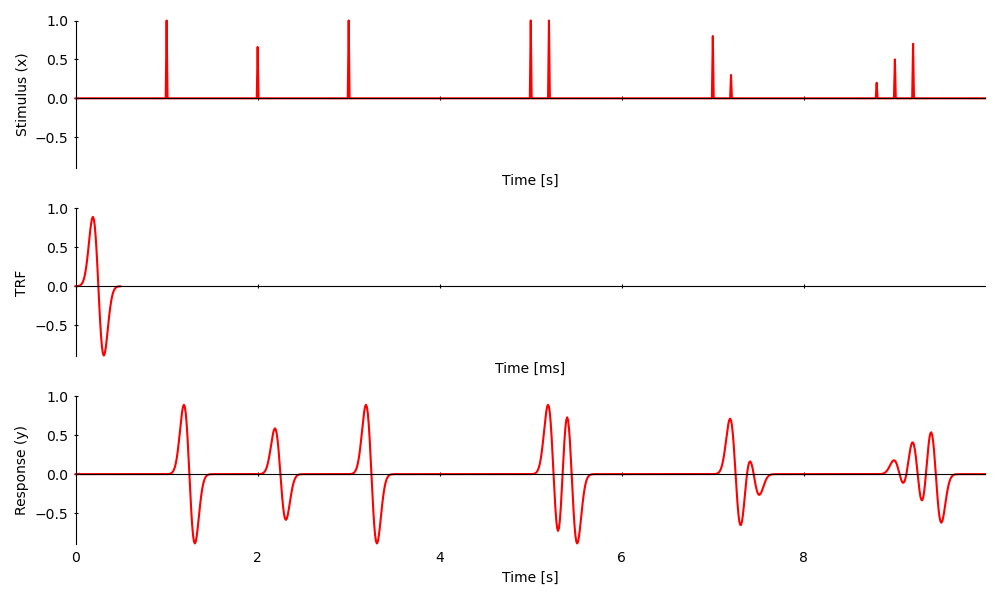
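Written out for discrete time, the convolution used above can be expressed as follows. The symbols are notation introduced here for illustration, not taken from the example: $h$ is the TRF, $t$ the sample index, $\tau$ the lag, and $T$ the number of TRF samples.

$$
y_t = \sum_{\tau=0}^{T-1} h_\tau \, x_{t-\tau}
$$

If $x$ is a single unit impulse at sample $t_0$, this reduces to $y_t = h_{t-t_0}$, that is, a copy of the TRF starting at the impulse.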

Since the convolution is linear,

- scaled stimuli cause scaled responses
- overlapping responses add up
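Both properties can be checked numerically. The following is a minimal sketch in plain NumPy rather than Eelbrain; the helpers `gaussian_bump` and `respond` are hypothetical names introduced here, and the TRF only approximates the shape used above (Eelbrain's `gaussian()` may normalize differently).

```python
import numpy as np

dt = 0.01                          # 10 ms sampling step, as in the example
trf_time = np.arange(50) * dt      # 500 ms TRF window

def gaussian_bump(center, width, t):
    """Unnormalized Gaussian (hypothetical helper, not Eelbrain's gaussian())."""
    return np.exp(-0.5 * ((t - center) / width) ** 2)

trf = gaussian_bump(0.200, 0.050, trf_time) - gaussian_bump(0.300, 0.050, trf_time)

def respond(stimulus):
    """Causal convolution with the TRF, truncated to the stimulus length."""
    return np.convolve(stimulus, trf)[:len(stimulus)]

# Two stimuli with one impulse each; their responses overlap in time
a = np.zeros(1000)
a[100] = 1.0    # impulse at 1.0 s
b = np.zeros(1000)
b[120] = 1.0    # impulse at 1.2 s, within the response to a

# Scaling: half the stimulus gives half the response
scaled_ok = np.allclose(respond(0.5 * a), 0.5 * respond(a))
# Superposition: the response to a sum is the sum of the responses
additive_ok = np.allclose(respond(a + b), respond(a) + respond(b))
print(scaled_ok, additive_ok)
```

Because of these two properties, the response to any stimulus is fully determined by the TRF.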

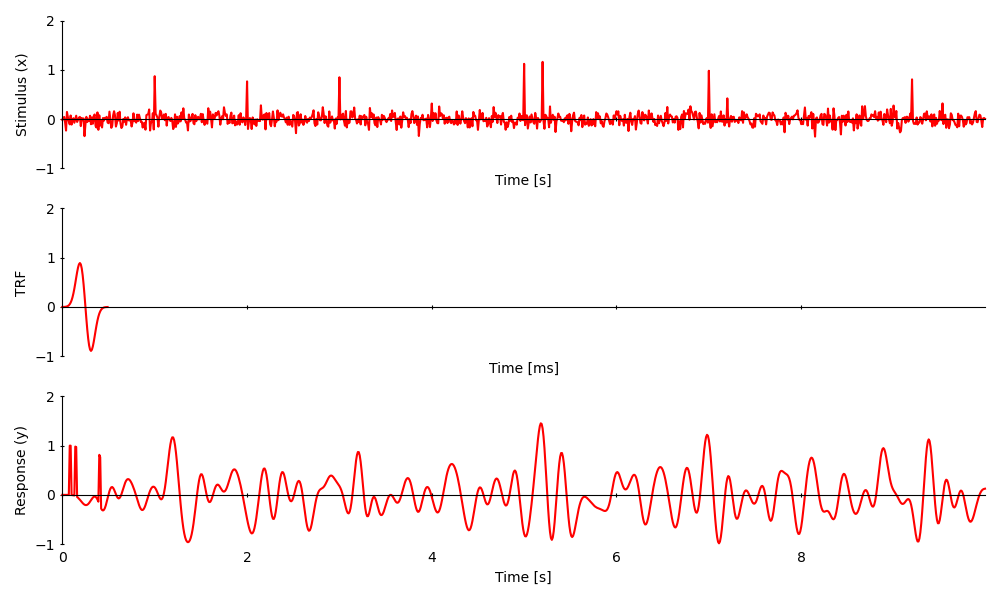

When the stimulus contains only non-zero elements, this works just the same, but the TRF might no longer be apparent in the response:
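As a rough illustration in plain NumPy (not the Eelbrain code of this example), a continuous noise stimulus makes every response sample a weighted sum of the preceding stimulus samples, so no isolated copy of the TRF shows up. The random stimulus and the sample index `n` are arbitrary choices made for this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)

# TRF shape resembling the one above (unnormalized Gaussians, an assumption)
t = np.arange(50) * 0.01
trf = np.exp(-0.5 * ((t - 0.2) / 0.05) ** 2) - np.exp(-0.5 * ((t - 0.3) / 0.05) ** 2)

# Continuous stimulus: Gaussian noise, so every sample is non-zero
x = rng.normal(0, 1, 1000)
y = np.convolve(x, trf)[:len(x)]

# Any response sample mixes the 50 most recent stimulus samples,
# weighted by the TRF at the corresponding lag
n = 500
manual = sum(trf[k] * x[n - k] for k in range(len(trf)))
print(np.isclose(y[n], manual))
```

The TRF is still fully present in the data, but only as the set of weights relating `x` to `y`, which is why it has to be estimated rather than read off the response.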

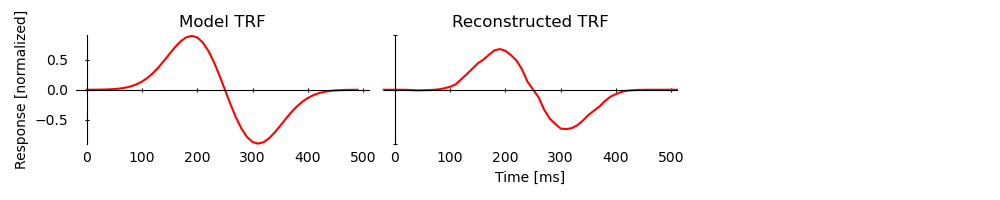

Given a stimulus and a response, there are different methods to reconstruct the TRF. Eelbrain comes with an implementation of the boosting() coordinate descent algorithm.
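To give an intuition for how a boosting-style coordinate descent can recover a TRF, here is a simplified sketch in plain NumPy. It is not Eelbrain's implementation and does not use its API: the functions `lagged_design` and `boost_trf`, the step size `delta`, and the 75/25 training/validation split are all assumptions made for illustration.

```python
import numpy as np

def lagged_design(x, n_lags):
    """Matrix whose column k holds x delayed by k samples (zero-padded)."""
    X = np.zeros((len(x), n_lags))
    for k in range(n_lags):
        X[k:, k] = x[:len(x) - k]
    return X

def boost_trf(x, y, n_lags, delta=0.02, max_iter=10000):
    """Simplified boosting: change one TRF element by +/- delta per step."""
    X = lagged_design(x, n_lags)
    split = len(x) * 3 // 4                 # assumed 75/25 train/validation split
    X_train, y_train = X[:split], y[:split]
    X_val, y_val = X[split:], y[split:]
    h = np.zeros(n_lags)                    # start from an empty TRF
    residual = y_train.copy()
    col_norms = np.sum(X_train ** 2, axis=0)
    best_val = np.sum(y_val ** 2)
    for _ in range(max_iter):
        # Change in squared training error for the best-signed step at each lag:
        # -2 * delta * |X_k . r| + delta^2 * ||X_k||^2
        corr = X_train.T @ residual
        change = -2 * delta * np.abs(corr) + delta ** 2 * col_norms
        k = np.argmin(change)
        if change[k] >= 0:
            break                           # no single step lowers the training error
        step = delta * np.sign(corr[k])
        h_new = h.copy()
        h_new[k] += step
        val_error = np.sum((y_val - X_val @ h_new) ** 2)
        if val_error >= best_val:
            break                           # early stopping on validation data
        h, best_val = h_new, val_error
        residual -= step * X_train[:, k]
    return h

# Simulated data: noise stimulus convolved with a known TRF
rng = np.random.default_rng(0)
t = np.arange(50) * 0.01
trf = np.exp(-0.5 * ((t - 0.2) / 0.05) ** 2) - np.exp(-0.5 * ((t - 0.3) / 0.05) ** 2)
x = rng.normal(0, 1, 3000)
y = np.convolve(x, trf)[:len(x)]

h_est = boost_trf(x, y, n_lags=len(trf))
print(np.corrcoef(h_est, trf)[0, 1])
```

Starting from zeros and growing the TRF in small steps tends to keep the estimate sparse, and stopping as soon as the validation error no longer improves guards against overfitting. A full implementation involves further choices (for example data scaling and cross-validation across several partitions) that this sketch leaves out.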
